Data in Galaxy JupyterLab


Your Galaxy instance comes with some local storage, but everything you create in or copy to that local storage will be lost when your instance is terminated or if there is any problem with the Galaxy portal (which may happen more often than you think or want!).

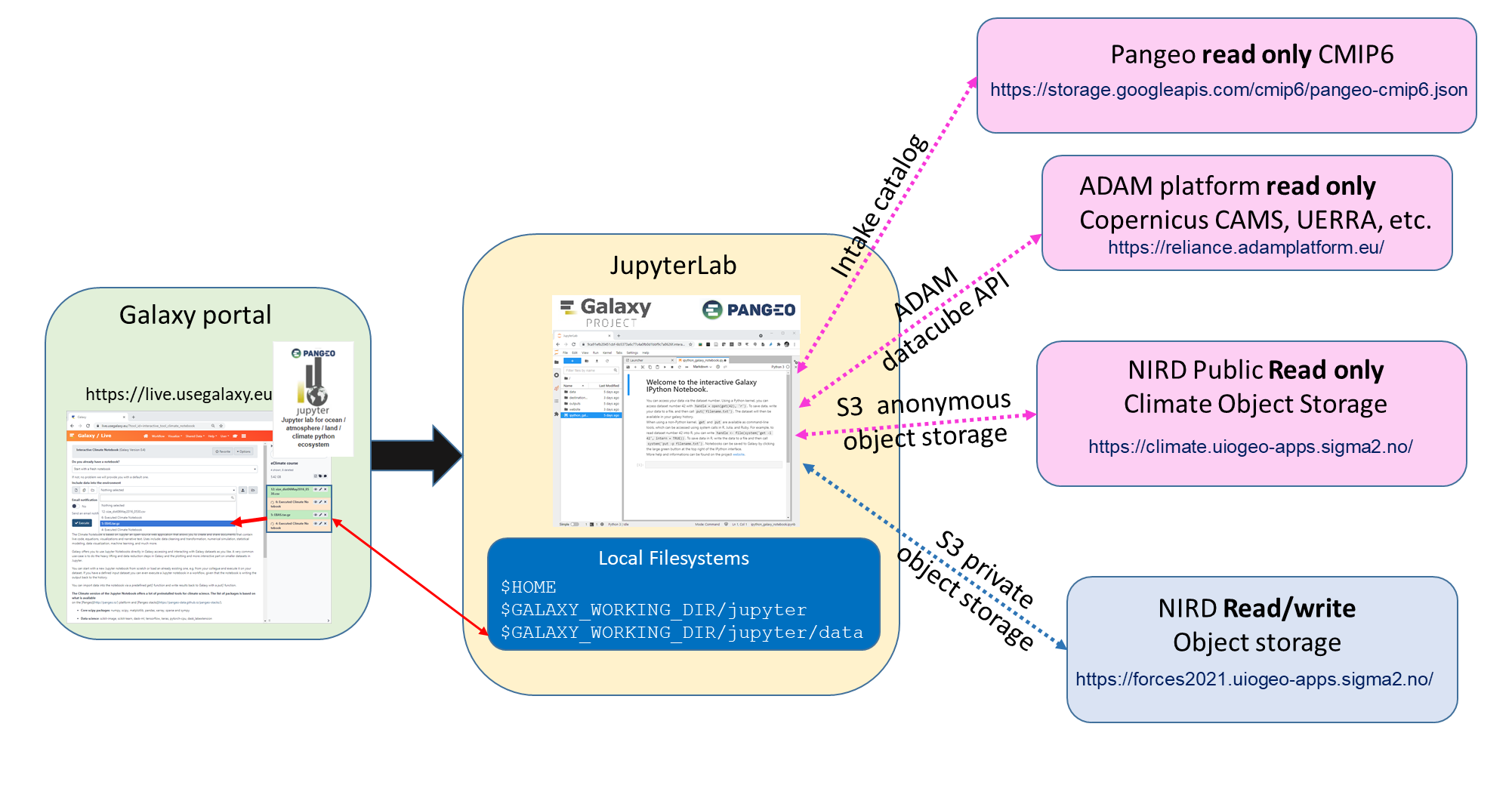

The different storage end points are represented on the figure below:

The dashed lines (blue and magenta) represent data access points that are all external to your Galaxy instance. There are many more access points where you can get data; we only list the ones that may be relevant for this course.

Pangeo CMIP6: read only and public access (anonymous) to CMIP6 data. For more information, see Pangeo CMIP6 documentation.

Data Cubes from the ADAM Platform: read-only access; you need to register at https://reliance.adamplatform.eu/. Use RoHUB Sign-in and log in with EGI Check-in (preferably with ORCID). Data can be accessed directly through the ADAM platform or using the ADAM Python API.

NIRD Public climate Object storage (end point: https://climate.uiogeo-apps.sigma2.no): read-only and anonymous access to climate data stored on the Norwegian National Infrastructure for Research Data (NIRD). xarray, pandas and many other PyData and Pangeo Python packages can manipulate data on s3-compatible object storage. Connections are made using packages such as s3fs and/or boto3.

NIRD Read/write object storage (end point: http://forces2021.uiogeo-apps.sigma2.no/): read and write access to this shared s3-compatible storage requires you to authenticate using credentials provided by your instructors. To make your work as reproducible as possible, make sure that the files you create locally in your JupyterLab instance are copied (backed up) to the NIRD project area (s3 object storage end point: http://forces2021.uiogeo-apps.sigma2.no/). If you are following this training material outside the course on eScience Tools in Climate Science, you will not get access to this private storage area.
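As an illustration of the anonymous, read-only access described above, here is a minimal sketch using s3fs. The end point is the public one quoted in the text; the helper names (`public_storage_options`, `open_public_filesystem`, `list_public`) are hypothetical and only serve this example. It assumes s3fs is installed in your JupyterLab environment.

```python
# Public NIRD end point, as quoted in the text above.
PUBLIC_ENDPOINT = "https://climate.uiogeo-apps.sigma2.no/"


def public_storage_options(endpoint_url=PUBLIC_ENDPOINT):
    """Build keyword arguments for an anonymous s3fs connection."""
    return {"anon": True, "client_kwargs": {"endpoint_url": endpoint_url}}


def open_public_filesystem():
    """Return an s3fs filesystem bound to the public NIRD end point."""
    # Imported here so the helper above can be used without s3fs installed.
    import s3fs

    return s3fs.S3FileSystem(**public_storage_options())


def list_public(path, limit=5):
    """Print the first `limit` entries found under `path` (needs network access)."""
    fs = open_public_filesystem()
    for entry in fs.ls(path)[:limit]:
        print(entry)
```

In a notebook with network access, `list_public("ESGF/CMIP6")` should list a few entries of the public CMIP6 area. The same options dictionary can usually be passed as `storage_options` to packages that rely on fsspec.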

Which data is available?

- ESGF CMIP6 on NIRD s3-compatible storage: use when data is not available on Pangeo CMIP6, for instance AerChemMIP.
  - endpoint: https://climate.uiogeo-apps.sigma2.no/
  - bucket: ESGF/CMIP6

- MODIS Terra: some data available on NIRD s3-compatible storage.
  - endpoint: https://climate.uiogeo-apps.sigma2.no/
  - bucket: ESGF/obs4MIPs/MODIS/MODIS6.1terra/

- ERA5: some monthly means available on https://forces2021.uiogeo-apps.sigma2.no/ (bucket: data/ERA5/monthly_means/0.25deg/).

- AERONET: some data available on NIRD s3-compatible storage.
  - endpoint: https://climate.uiogeo-apps.sigma2.no/
  - bucket: ESGF/obs4MIPs/AERONET/AeronetSunV3Lev1.5.daily/

Contact your group leader if data you need is missing.
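To keep track of where each dataset lives, the locations listed above can be gathered in a small lookup table. The sketch below is only an illustration: the dictionary keys (including "AERONET", taken from the bucket path) and the helper names are assumptions, not part of the course material. It relies on the usual s3 convention that the first path component is the bucket and the rest is a prefix inside it.

```python
# End points and bucket paths copied from the list above.
# Keys and helper names are illustrative only.
DATA_LOCATIONS = {
    "ESGF CMIP6": ("https://climate.uiogeo-apps.sigma2.no/", "ESGF/CMIP6"),
    "MODIS Terra": (
        "https://climate.uiogeo-apps.sigma2.no/",
        "ESGF/obs4MIPs/MODIS/MODIS6.1terra/",
    ),
    "ERA5 monthly means": (
        "https://forces2021.uiogeo-apps.sigma2.no/",
        "data/ERA5/monthly_means/0.25deg/",
    ),
    "AERONET": (
        "https://climate.uiogeo-apps.sigma2.no/",
        "ESGF/obs4MIPs/AERONET/AeronetSunV3Lev1.5.daily/",
    ),
}


def split_bucket(path):
    """Split 'bucket/some/prefix/' into ('bucket', 'some/prefix')."""
    bucket, _, prefix = path.strip("/").partition("/")
    return bucket, prefix


def describe(name):
    """Return end point, bucket, prefix and s3 URI for a named dataset."""
    endpoint_url, path = DATA_LOCATIONS[name]
    bucket, prefix = split_bucket(path)
    return {
        "endpoint_url": endpoint_url,
        "bucket": bucket,
        "prefix": prefix,
        "uri": f"s3://{bucket}/{prefix}",
    }
```

Note that the ERA5 entry points to the private end point, so listing it requires the credentials provided by your instructors, whereas the other entries are on the public end point.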

How to access data?

See example notebooks for usage:

CMIP6 example: this example shows how to manipulate Pangeo CMIP6 data. It uses intake, a lightweight package for finding, investigating, loading and disseminating data, together with intake-esm, and shows how to search, manipulate and visualize CMIP6 data.

Data from NIRD: this example shows how to access public data stored on NIRD (https://climate.uiogeo-apps.sigma2.no/) using s3fs and xarray. The results are saved on the shared NIRD private s3-compatible storage (endpoint: https://forces2021.uiogeo-apps.sigma2.no/). Make sure you update the path (s3_path in the notebook) to save your results in your own working area (for instance s3://work/my-github-username/myfile.zarr, where you replace my-github-username with your own GitHub username). If you have any doubts, check with your group leader to know which folder to use.

Read ebas data: this example shows how to use pydap to access remote data via OPeNDAP/DODS and how to save your results via s3fs on the shared NIRD private s3-compatible storage (endpoint: https://forces2021.uiogeo-apps.sigma2.no/). When saving your results/data, check with your group leader to know which folder to use (most likely something like s3://work/my-github-username/myfile.nc, where you replace my-github-username with your own GitHub username).

UERRA regional reanalysis: this example shows how to access data cubes from the ADAM platform via Python.
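The saving step mentioned in these examples can be sketched as follows. This is not the notebooks' own code: the helper names and the environment variable names (`NIRD_S3_KEY`, `NIRD_S3_SECRET`) are hypothetical, and the `work` bucket layout simply follows the example path s3://work/my-github-username/myfile.zarr given above. It assumes s3fs, xarray and zarr are installed and that you have received credentials from your instructors.

```python
import os

# Private NIRD end point, as quoted in the text above.
PRIVATE_ENDPOINT = "https://forces2021.uiogeo-apps.sigma2.no/"


def work_path(github_username, filename, bucket="work"):
    """Build a path such as s3://work/<github_username>/<filename>."""
    if not github_username or "/" in github_username:
        raise ValueError("github_username must be a non-empty name without '/'")
    return f"s3://{bucket}/{github_username}/{filename}"


def open_private_filesystem(key=None, secret=None):
    """Return an authenticated s3fs filesystem for the private end point."""
    import s3fs  # imported here so work_path() works without s3fs installed

    # Hypothetical environment variable names; use whatever your instructors advise.
    key = key or os.environ["NIRD_S3_KEY"]
    secret = secret or os.environ["NIRD_S3_SECRET"]
    return s3fs.S3FileSystem(
        key=key,
        secret=secret,
        client_kwargs={"endpoint_url": PRIVATE_ENDPOINT},
    )


def save_zarr(dataset, s3_path, fs):
    """Write an xarray Dataset to `s3_path` as a zarr store, overwriting it."""
    store = fs.get_mapper(s3_path)
    dataset.to_zarr(store=store, mode="w", consolidated=True)
```

For instance, `save_zarr(ds, work_path("my-github-username", "myfile.zarr"), open_private_filesystem())` would write the dataset `ds` to your own working area. Keeping the path construction in one small function makes it harder to write into someone else's folder by accident.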