Urban Heat Island effect in Fortaleza, Brazil

Contents

Table of Contents¶

1. Introduction ¶

This work intends to demonstrate the occurrence of the urban heat island phenomenon over the city of Fortaleza (Brazil). We start with aggregating bibliography and other relevant documents/data (ERA5-Land). We access ERA5-Land from the RELIANCE datacube resources, by using the functionalities provided in the Adam API.

Questions

Is it possible to quantify the existence of an Urban Heat Island Effect in Fortaleza (Brazil) with ERA5-Land?

Is there any correlation with local measurements available?

How has the situation changed over the last decades?

2. Data Wrangling ¶

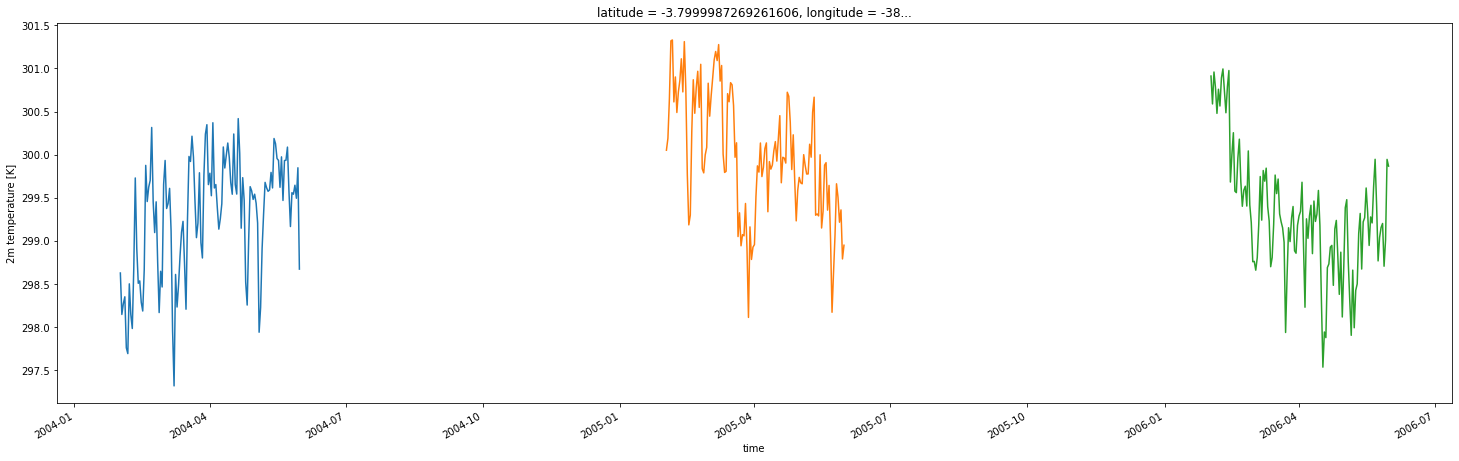

This study will compare 2 meter temperature from ERA5-Land from 3 different sites in the city of Fortaleza and its surroundings:

MWS UFC: 3 44’ 20’’ S; 38 35’ 37’’ W

AWS FOR 3 47’ 42’’ S; 38 33’ 30’’ W

AWS SGA 3 39’ 83’’ S; 38 56’ 32’’ W

Initialization¶

Choose a country and add its name and country code

Choose the variable to analyze (2M Temperature, etc.)

Choose the area for your analysis

Choose the country of interest¶

country_code = 'BR'

country_fullname = "Brazil"

variable_name = '2M_TEMPERATURE'

variable_unit = 'K'

variable_long_name = '2m temperature'

Geojson for selecting data¶

The geometry field is extracted from a GeoJSON file, retrieving the value of the “feature” element.

To create a geojson file for the area of interest, you can use https://geojson.io/

Then paste the result below in the geojson variable

geojson = """{

"type": "FeatureCollection",

"features": [

{

"type": "Feature",

"properties": {},

"geometry": {

"type": "Polygon",

"coordinates": [

[

[

-39,

-4.48

],

[

-38,

-4.48

],

[

-38,

-3.39

],

[

-39,

-3.39

],

[

-39,

-4.48

]

]

]

}

}

]

}"""

Organize my data¶

Define a prefix for my project (you may need to adjust it for your own usage on your infrastructure).

input folder where all the data used as input to my Jupyter Notebook is stored (and eventually shared)

output folder where all the results to keep are stored

tool folder where all the tools, including this Jupyter Notebook will be copied for sharing

Create all corresponding folders

import os

import pathlib

PREFIX_PATH = os.getenv("HOME") + "/datahub/Reliance/"

WORKDIR_FOLDER = PREFIX_PATH + "UHI-ERA5-Land"

print("WORKDIR FOLDER: ", WORKDIR_FOLDER)

INPUT_DATA_DIR = os.path.join(WORKDIR_FOLDER, 'input')

OUTPUT_DATA_DIR = os.path.join(WORKDIR_FOLDER, 'output')

TOOL_DATA_DIR = os.path.join(WORKDIR_FOLDER, 'tool')

list_folders = [INPUT_DATA_DIR, OUTPUT_DATA_DIR, TOOL_DATA_DIR]

for folder in list_folders:

pathlib.Path(folder).mkdir(parents=True, exist_ok=True)

WORKDIR FOLDER: /home/jovyan/datahub/Reliance/UHI-ERA5-Land

Geojson file for selecting data from ADAM¶

We dissolve geojson in case we have more than one polygon and then save the results into a geojson file

import geopandas as gpd

local_path_geom = os.path.join(INPUT_DATA_DIR, country_code.lower() + '.geo.json')

local_path_geom

'/home/jovyan/datahub/Reliance/UHI-ERA5-Land/input/br.geo.json'

if (pathlib.Path(local_path_geom).exists()):

os.remove(local_path_geom)

f = open(local_path_geom, "w")

f.write(geojson)

f.close()

data = gpd.read_file(local_path_geom)

single_shape = data.dissolve()

if (pathlib.Path(local_path_geom).exists()):

os.remove(local_path_geom)

single_shape.to_file(local_path_geom, driver='GeoJSON')

Import python packages¶

Authentication to ADAM-Platform to get ERA5-Land 2 meter Temperature variable¶

Get the data requried for the analysis. The following lines of code will show the personal Adam API-Key of the user and the endpoint currently in use, that provide access to the products in the related catalogue. At the end of the execution, if the authentication process is successfull the personal token and the expiration time should be returned as outputs.

pip install adamapi

Requirement already satisfied: adamapi in /opt/conda/lib/python3.8/site-packages (2.0.11)

Requirement already satisfied: imageio in /opt/conda/lib/python3.8/site-packages (from adamapi) (2.9.0)

Requirement already satisfied: requests>=2.22.0 in /opt/conda/lib/python3.8/site-packages (from adamapi) (2.25.1)

Requirement already satisfied: idna<3,>=2.5 in /opt/conda/lib/python3.8/site-packages (from requests>=2.22.0->adamapi) (2.10)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.8/site-packages (from requests>=2.22.0->adamapi) (2021.10.8)

Requirement already satisfied: urllib3<1.27,>=1.21.1 in /opt/conda/lib/python3.8/site-packages (from requests>=2.22.0->adamapi) (1.26.4)

Requirement already satisfied: chardet<5,>=3.0.2 in /opt/conda/lib/python3.8/site-packages (from requests>=2.22.0->adamapi) (4.0.0)

Requirement already satisfied: pillow in /opt/conda/lib/python3.8/site-packages (from imageio->adamapi) (8.1.2)

Requirement already satisfied: numpy in /opt/conda/lib/python3.8/site-packages (from imageio->adamapi) (1.19.5)

Note: you may need to restart the kernel to use updated packages.

Get ADAM Key from a local file¶

User would need to create this file to run the notebook.

adam_key = open(os.path.join(os.environ['HOME'],"adam-key")).read().rstrip()

import adamapi as adam

a = adam.Auth()

a.setKey(adam_key)

a.setAdamCore('https://reliance.adamplatform.eu')

a.authorize()

{'expires_at': '2022-02-28T12:48:29.583Z',

'access_token': '0602a77c216948f09105623c28db6875',

'refresh_token': '9aa08c2019c042db82075b4ef18aa31e',

'expires_in': 3600}

Access to Product¶

The products discovery operation related to a specific dataset is implemented in the Adam API with the getProducts() function. A combined spatial and temporal search can be requested by specifying the datasetId for the selected dataset, the geometry argument that specifies the Area Of Interest, and a temporal range defined by startDate and endDate . The geometry must always be defined by a GeoJson object that describes the polygon in the counterclockwise winding order. The optional arguments startIndex and maxRecords can set the list of the results returned as an output. The results of the search are displayed with their metadata and they are sorted starting from the most recent product.

Data Access¶

pip install geojson_rewind

Requirement already satisfied: geojson_rewind in /opt/conda/lib/python3.8/site-packages (1.0.2)

Note: you may need to restart the kernel to use updated packages.

from geojson_rewind import rewind

import json

The GeoJson object needs to be rearranged according to the counterclockwise winding order. This operation is executed in the next few lines to obtain a geometry that meets the requirements of the method. Geom_1 is the final result to be used in the discovery operation.

with open(local_path_geom) as f:

geom_dict = json.load(f)

output = rewind(geom_dict)

geom_1 = str(geom_dict['features'][0]['geometry'])

start_year = '2004'

end_year = '2006'

It is possible to access the data with the getData function. Each product in the output list intersects the selected geometry and the following example shows how to access a specific product from the list of results obtained in the previous step. While the datasetId is always a mandatory parameter, for each data access request the getData function needs only one of the following arguments: geometry or productId , that is the value of the _id field in each product metadata. In the case of a spatial and temporal search the geometry must be provided to the function, together with the time range of interest. The output of the getData function is always a .zip file containing the data retrieved with the data access request, providing the spatial subset of the product. The zip file will contain a geotiff file for each of the spatial subsets extracted in the selected time range.

Define a function to select a time range and get data¶

def getZipData(auth, dataset_info):

if not (pathlib.Path(pathlib.Path(dataset_info['outputFname']).stem).exists() or pathlib.Path(dataset_info['outputFname']).exists()):

data=adam.GetData(auth)

image = data.getData(

datasetId = dataset_info['datasetID'],

startDate = dataset_info['startDate'],

endDate = dataset_info['endDate'],

geometry = dataset_info['geometry'],

outputFname = dataset_info['outputFname'])

print(image)

Get variable of interest for each day from start_date to end_date¶

This process can take a bit of time so be patient!

import time

from IPython.display import clear_output

datasetID = '90866:ERA5_LAND_DAILY_2M_TEMPERATURE'

start = time.time()

for year in ['2004', '2005', '2006']:

datasetInfo = {

'datasetID' : datasetID,

'startDate' : year + '-02-01',

'endDate' : year + '-05-31',

'geometry' : geom_1,

'outputFname' : INPUT_DATA_DIR + '/' + variable_name + '_' + country_code + '_ADAMAPI_' + year + '.zip'

}

getZipData(a, datasetInfo)

end = time.time()

clear_output(wait=True)

delta1 = end - start

print('\033[1m'+'Processing time: ' + str(round(delta1,2)))

Processing time: 160.73

Data Analysis and Visualization of ERA5-Land 2 meter Temperature Dataset¶

Unzip data¶

import zipfile

def unzipData(filename, out_prefix):

with zipfile.ZipFile(filename, 'r') as zip_ref:

zip_ref.extractall(path = os.path.join(out_prefix, pathlib.Path(filename).stem))

for year in ['2004', '2005', '2006']:

filename = INPUT_DATA_DIR + '/' + variable_name + '_' + country_code + '_ADAMAPI_' + year + '.zip'

target_file = pathlib.Path(os.path.join(INPUT_DATA_DIR, pathlib.Path(pathlib.Path(filename).stem)))

if not target_file.exists():

unzipData(filename, INPUT_DATA_DIR)

Read data and analyze¶

import pandas as pd

import xarray as xr

import glob

from datetime import datetime

def paths_to_datetimeindex(paths):

return [datetime.strptime(date.split('_')[-1].split('.')[0], '%Y-%m-%dt%f') for date in paths]

def getData(dirtif, variable):

geotiff_list = glob.glob(dirtif)

# Create variable used for time axis

time_var = xr.Variable('time', paths_to_datetimeindex(geotiff_list))

# Load in and concatenate all individual GeoTIFFs

geotiffs_da = xr.concat([xr.open_rasterio(i, parse_coordinates=True) for i in geotiff_list],

dim=time_var)

# Covert our xarray.DataArray into a xarray.Dataset

geotiffs_da = geotiffs_da.to_dataset('band')

# Rename the dimensions to make it CF-convention compliant

geotiffs_da = geotiffs_da.rename_dims({'y': 'latitude', 'x':'longitude'})

# Rename the variable to a more useful name

geotiffs_da = geotiffs_da.rename_vars({1: variable, 'y':'latitude', 'x':'longitude'})

return geotiffs_da

geotiff_ds = getData( INPUT_DATA_DIR + '/' + variable_name + '_'+ country_code + '_ADAMAPI_20*/*.tif', variable_name)

geotiff_ds[variable_name].attrs = {'units' : variable_unit, 'long_name' : variable_long_name }

geotiff_ds

<xarray.Dataset>

Dimensions: (time: 361, latitude: 12, longitude: 11)

Coordinates:

* latitude (latitude) float64 -3.4 -3.5 -3.6 -3.7 ... -4.3 -4.4 -4.5

* longitude (longitude) float64 -39.0 -38.9 -38.8 ... -38.2 -38.1 -38.0

* time (time) datetime64[ns] 2006-05-31 2006-05-30 ... 2004-02-01

Data variables:

2M_TEMPERATURE (time, latitude, longitude) float32 300.5 nan ... 0.0 0.0

Attributes:

transform: (0.099999998304, 0.0, -39.04999939135999, 0.0, -0.0999999...

crs: +init=epsg:4326

res: (0.099999998304, 0.0999999986435015)

is_tiled: 0

nodatavals: (0.0,)

scales: (1.0,)

offsets: (0.0,)

AREA_OR_POINT: Area- time: 361

- latitude: 12

- longitude: 11

- latitude(latitude)float64-3.4 -3.5 -3.6 ... -4.3 -4.4 -4.5

array([-3.399999, -3.499999, -3.599999, -3.699999, -3.799999, -3.899999, -3.999999, -4.099999, -4.199999, -4.299999, -4.399999, -4.499999]) - longitude(longitude)float64-39.0 -38.9 -38.8 ... -38.1 -38.0

array([-38.999999, -38.899999, -38.799999, -38.699999, -38.599999, -38.499999, -38.399999, -38.299999, -38.199999, -38.099999, -37.999999]) - time(time)datetime64[ns]2006-05-31 ... 2004-02-01

array(['2006-05-31T00:00:00.000000000', '2006-05-30T00:00:00.000000000', '2006-05-29T00:00:00.000000000', ..., '2004-02-03T00:00:00.000000000', '2004-02-02T00:00:00.000000000', '2004-02-01T00:00:00.000000000'], dtype='datetime64[ns]')

- 2M_TEMPERATURE(time, latitude, longitude)float32300.5 nan nan nan ... 0.0 0.0 0.0

- units :

- K

- long_name :

- 2m temperature

array([[[300.49664, nan, nan, ..., nan, nan, 0. ], [300.3486 , nan, nan, ..., nan, nan, 0. ], [300.11526, 300.22955, 300.37643, ..., nan, nan, 0. ], ..., [296.18 , 296.55847, 297.82922, ..., 299.7611 , 300.08698, 0. ], [296.7994 , 297.7523 , 298.6772 , ..., 299.63922, 299.9673 , 0. ], [ 0. , 0. , 0. , ..., 0. , 0. , 0. ]], [[300.3957 , nan, nan, ..., nan, nan, 0. ], [300.3013 , nan, nan, ..., nan, nan, 0. ], [300.14047, 300.20996, 300.31433, ..., nan, nan, 0. ], ... [295.85675, 296.05475, 296.94882, ..., 298.1941 , 298.40802, 0. ], [296.3764 , 296.96112, 297.62833, ..., 298.2041 , 298.41046, 0. ], [ 0. , 0. , 0. , ..., 0. , 0. , 0. ]], [[298.8412 , nan, nan, ..., nan, nan, 0. ], [298.79092, nan, nan, ..., nan, nan, 0. ], [298.8256 , 298.78882, 298.80072, ..., nan, nan, 0. ], ..., [296.15308, 296.4076 , 297.2995 , ..., 298.6153 , 298.80106, 0. ], [296.51883, 297.1657 , 297.86975, ..., 298.6005 , 298.80087, 0. ], [ 0. , 0. , 0. , ..., 0. , 0. , 0. ]]], dtype=float32)

- transform :

- (0.099999998304, 0.0, -39.04999939135999, 0.0, -0.0999999986435015, -3.3499987330304037)

- crs :

- +init=epsg:4326

- res :

- (0.099999998304, 0.0999999986435015)

- is_tiled :

- 0

- nodatavals :

- (0.0,)

- scales :

- (1.0,)

- offsets :

- (0.0,)

- AREA_OR_POINT :

- Area

geotiff_ds.to_netcdf(OUTPUT_DATA_DIR + '/' + variable_name + '_'+ country_code + '_ADAMAPI.netcdf')

Read dataset locally¶

geotiff_ds = xr.open_dataset(OUTPUT_DATA_DIR + '/' + variable_name + '_'+ country_code + '_ADAMAPI.netcdf')

geotiff_ds

<xarray.Dataset>

Dimensions: (time: 361, latitude: 12, longitude: 11)

Coordinates:

* latitude (latitude) float64 -3.4 -3.5 -3.6 -3.7 ... -4.3 -4.4 -4.5

* longitude (longitude) float64 -39.0 -38.9 -38.8 ... -38.2 -38.1 -38.0

* time (time) datetime64[ns] 2006-05-31 2006-05-30 ... 2004-02-01

Data variables:

2M_TEMPERATURE (time, latitude, longitude) float32 300.5 nan ... 0.0 0.0

Attributes:

transform: [ 0.1 0. -39.04999939 0. -0....

crs: +init=epsg:4326

res: [0.1 0.1]

is_tiled: 0

nodatavals: 0.0

scales: 1.0

offsets: 0.0

AREA_OR_POINT: Area- time: 361

- latitude: 12

- longitude: 11

- latitude(latitude)float64-3.4 -3.5 -3.6 ... -4.3 -4.4 -4.5

array([-3.399999, -3.499999, -3.599999, -3.699999, -3.799999, -3.899999, -3.999999, -4.099999, -4.199999, -4.299999, -4.399999, -4.499999]) - longitude(longitude)float64-39.0 -38.9 -38.8 ... -38.1 -38.0

array([-38.999999, -38.899999, -38.799999, -38.699999, -38.599999, -38.499999, -38.399999, -38.299999, -38.199999, -38.099999, -37.999999]) - time(time)datetime64[ns]2006-05-31 ... 2004-02-01

array(['2006-05-31T00:00:00.000000000', '2006-05-30T00:00:00.000000000', '2006-05-29T00:00:00.000000000', ..., '2004-02-03T00:00:00.000000000', '2004-02-02T00:00:00.000000000', '2004-02-01T00:00:00.000000000'], dtype='datetime64[ns]')

- 2M_TEMPERATURE(time, latitude, longitude)float32...

- units :

- K

- long_name :

- 2m temperature

array([[[300.49664, nan, ..., nan, 0. ], [300.3486 , nan, ..., nan, 0. ], ..., [296.7994 , 297.7523 , ..., 299.9673 , 0. ], [ 0. , 0. , ..., 0. , 0. ]], [[300.3957 , nan, ..., nan, 0. ], [300.3013 , nan, ..., nan, 0. ], ..., [297.2068 , 297.98944, ..., 300.23526, 0. ], [ 0. , 0. , ..., 0. , 0. ]], ..., [[298.30862, nan, ..., nan, 0. ], [298.2385 , nan, ..., nan, 0. ], ..., [296.3764 , 296.96112, ..., 298.41046, 0. ], [ 0. , 0. , ..., 0. , 0. ]], [[298.8412 , nan, ..., nan, 0. ], [298.79092, nan, ..., nan, 0. ], ..., [296.51883, 297.1657 , ..., 298.80087, 0. ], [ 0. , 0. , ..., 0. , 0. ]]], dtype=float32)

- transform :

- [ 0.1 0. -39.04999939 0. -0.1 -3.34999873]

- crs :

- +init=epsg:4326

- res :

- [0.1 0.1]

- is_tiled :

- 0

- nodatavals :

- 0.0

- scales :

- 1.0

- offsets :

- 0.0

- AREA_OR_POINT :

- Area

Sites¶

MWS UFC: 3 44’ 20’’ S; 38 35’ 37’’ W

AWS FOR 3 47’ 42’’ S; 38 33’ 30’’ W

AWS SGA 3 39’ 83’’ S; 38 56’ 32’’ W

aws_for = geotiff_ds.sel(latitude=-3.795, longitude=-38.5583, method='nearest')

aws_for

<xarray.Dataset>

Dimensions: (time: 361)

Coordinates:

latitude float64 -3.8

longitude float64 -38.6

* time (time) datetime64[ns] 2006-05-31 2006-05-30 ... 2004-02-01

Data variables:

2M_TEMPERATURE (time) float32 299.9 299.9 299.0 298.7 ... 298.3 298.1 298.6

Attributes:

transform: [ 0.1 0. -39.04999939 0. -0....

crs: +init=epsg:4326

res: [0.1 0.1]

is_tiled: 0

nodatavals: 0.0

scales: 1.0

offsets: 0.0

AREA_OR_POINT: Area- time: 361

- latitude()float64-3.8

array(-3.79999873)

- longitude()float64-38.6

array(-38.5999994)

- time(time)datetime64[ns]2006-05-31 ... 2004-02-01

array(['2006-05-31T00:00:00.000000000', '2006-05-30T00:00:00.000000000', '2006-05-29T00:00:00.000000000', ..., '2004-02-03T00:00:00.000000000', '2004-02-02T00:00:00.000000000', '2004-02-01T00:00:00.000000000'], dtype='datetime64[ns]')

- 2M_TEMPERATURE(time)float32299.9 299.9 299.0 ... 298.1 298.6

- units :

- K

- long_name :

- 2m temperature

array([299.86777, 299.9458 , 299.00946, ..., 298.27283, 298.14728, 298.6281 ], dtype=float32)

- transform :

- [ 0.1 0. -39.04999939 0. -0.1 -3.34999873]

- crs :

- +init=epsg:4326

- res :

- [0.1 0.1]

- is_tiled :

- 0

- nodatavals :

- 0.0

- scales :

- 1.0

- offsets :

- 0.0

- AREA_OR_POINT :

- Area

import matplotlib.pyplot as plt

fig=plt.figure(figsize=(25,7))

for year in [2004, 2005, 2006]:

aws_for['2M_TEMPERATURE'].sel(time=aws_for.time.dt.year.isin([year])).plot()