EOSC Nordic WP5 tasks and status

We describe below WP5 tasks as defined in the submitted application. Then we give an overview of the status at the start of the project (state of the art), then the current status of each task. Status of each task is regularly updated (add new section with a new date).

EOSC Nordic Task 5.2.1

T5.2.1: Cross-border data processing workflows (M1-36) - Lead: UT/ETAIS Participants: UIO-USIT/SIGMA2, UICE, SNIC, UGOT/GGBC, FMI, SU/SNIC, UIO-GEO/SIGMA2

In this subtask, we will facilitate data pre- and post-processing workflows (High Performance computation or High Throughput computation) on distributed data and computing resources by enabling community-specific or thematic portals, such as PlutoF and Galaxy-based geoportal, traditionally designed to submit jobs on local clusters, to allow scheduling of jobs on remote resources. The API modules that will be developed to support the most commonly and widely used Distributed Resource Managers (DRMs) will be designed to become a generic solution, i.e. independent from the architecture and the technology of any given portal.
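
To make the idea of a portal-independent DRM module more concrete, here is a minimal sketch, not the project's actual API. It assumes a hypothetical `JobRequest` description and a hypothetical `ResourceManager` interface; the only real-world detail is the set of `sbatch` options used by the Slurm backend (`--job-name`, `--ntasks`, `--time`, `--wrap`). All class and function names are illustrative assumptions.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import List


@dataclass
class JobRequest:
    """Portal-independent description of a job (hypothetical fields)."""
    name: str
    command: str
    cores: int = 1
    walltime_minutes: int = 60


class ResourceManager(ABC):
    """Interface that each DRM backend would implement (assumption)."""

    @abstractmethod
    def submit_command(self, job: JobRequest) -> List[str]:
        """Return the argument list a portal would run to submit the job."""


class SlurmManager(ResourceManager):
    """Backend that translates a job into an sbatch invocation."""

    def submit_command(self, job: JobRequest) -> List[str]:
        return [
            "sbatch",
            f"--job-name={job.name}",
            f"--ntasks={job.cores}",
            f"--time={job.walltime_minutes}",  # a bare number means minutes
            f"--wrap={job.command}",
        ]


class LocalManager(ResourceManager):
    """Backend that runs the job on the portal host itself."""

    def submit_command(self, job: JobRequest) -> List[str]:
        return ["sh", "-c", job.command]


def plan_submission(job: JobRequest, manager: ResourceManager) -> List[str]:
    """The portal only sees this call; it never depends on a specific DRM."""
    return manager.submit_command(job)
```

A portal would build one `JobRequest` and hand it to whichever backend is configured, for example `plan_submission(JobRequest("regrid", "python regrid.py", cores=4), SlurmManager())`. Supporting another DRM would then mean adding one more subclass rather than changing the portal.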

What is known/available at the start of the project

Here we list what is relevant for the Climate science demonstrator only.

- Galaxy Pulsar, a job execution system that can be distributed across several European datacenters, allowing Galaxy instances to scale their computing power over heterogeneous resources.

- Galaxy workflows are text files that can be easily exchanged. Workflows can be searched within each Galaxy instance; for instance, shared workflows are published on Galaxy Europe.

- Galaxy shared histories. Galaxy allows users to share their “histories” (data, processing, etc.) via a link. Users can set permissions to restrict access to a group of users if necessary (or a single user).
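
Because workflows are plain text, they can be inspected without a running Galaxy instance. The sketch below assumes the exported workflow is JSON with a top-level `steps` mapping whose entries may carry a `tool_id`; the example workflow and its tool ids are made up for illustration. It lists the tools a workflow needs, which is one way to check what must be installed on a target instance.

```python
import json


def tools_in_workflow(workflow_text):
    """Return the sorted, de-duplicated tool ids used by a workflow."""
    workflow = json.loads(workflow_text)
    tool_ids = set()
    for step in workflow.get("steps", {}).values():
        tool_id = step.get("tool_id")
        if tool_id:  # input steps carry no tool id
            tool_ids.add(tool_id)
    return sorted(tool_ids)


# Hypothetical workflow content, reduced to the fields used above.
EXAMPLE_WORKFLOW = json.dumps({
    "a_galaxy_workflow": "true",
    "name": "hypothetical climate workflow",
    "steps": {
        "0": {"type": "data_input", "tool_id": None, "name": "Input dataset"},
        "1": {"type": "tool", "tool_id": "example_regrid", "name": "Regrid"},
        "2": {"type": "tool", "tool_id": "example_plot", "name": "Plot"},
    },
})

print(tools_in_workflow(EXAMPLE_WORKFLOW))
```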

Status: January 2020

Preliminary list of tasks to enable T5.2.1:

- The plan is to install one single Galaxy instance for all the Nordics. Pulsar will be used to submit jobs on various platforms (HPCs and cloud computing). The objective is to use a similar setting as the one used by Galaxy Europe to ease maintenance and facilitate deployment of new tools by the Climate community.

- The list of Galaxy tools available/needed is provided and maintained by the Climate community. T5.2.1 will install Galaxy tools that are made available in the Galaxy Toolshed or available as interactive environment in the Galaxy Europe github repository.

- The list of available/needed training material is also provided and maintained by the Climate community (NICEST2). T5.2.1 will install Galaxy tools and datasets (Galaxy data libraries) necessary for users to use this training material on the Nordic Galaxy instance.

Status: April 2020

Galaxy Training material

New training material under review for publication:

New training material under development:

- Climate 101 is in preparation on NordicESMHub; corresponding PR.

New training material planned:

- Analyzing CMIP6 data with Galaxy Climate JupyterLab (in preparation; NICEST2 - not started yet)

- ESMValTool with Galaxy Climate JupyterLab (in preparation; NICEST2 - not started yet)

- Running CESM with Galaxy Climate JupyterLab (in preparation; it will be based on GEO4962, a course that is regularly given at the University of Oslo. See GEO4962).

Status: May 2020

Galaxy Training material

New training materials published:

New training material planned:

Galaxy climate workbench framework for EOSC-Nordic

More information on Galaxy climate workbench can be found here.

- Galaxy tools for Climate Analysis

- Galaxy Training material for Climate Analysis. This page is regularly updated to reflect status and progress.

EOSC Nordic Task 5.2.2

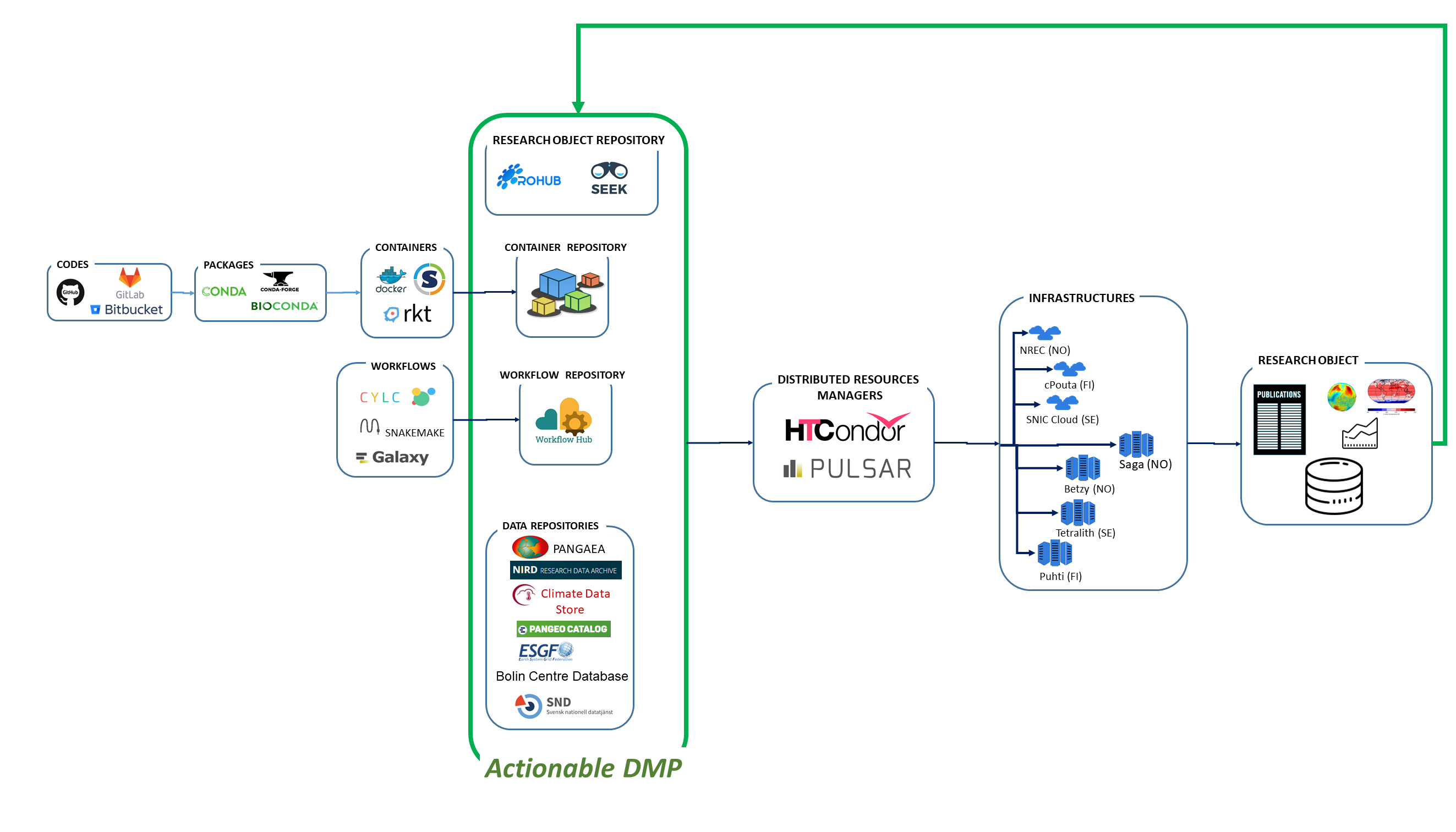

T5.2.2: Code Repositories, Containerization and “virtual laboratories” (M1-36) - Lead: SIGMA2 Participants: UICE, CSC, UIO-GEO/SIGMA2, UIO-INF/SIGMA2, UH

In this subtask, we will pilot solutions for cross-border “virtual laboratories” to allow researchers to work in a common software and data environment regardless of which computing infrastructure the analysis is performed on, thus ensuring the highest reproducibility of the results. The work will encompass evaluations of different Docker Hub technologies provided by the EOSC-hub as well as mechanisms for build automation, package management and containerization. The subtask will focus on building a natural language processing laboratory, but the overall goal will be to create a generic recipe for building virtual laboratories.

What is known/available at the start of the project

- Tools developed in the framework of Galaxy are available in the NordicESMHub github organization as galaxy-tools github repository.

- conda package manager has been used by the Norwegian Climate community for packaging tools (for instance cesm) in bioconda. conda-forge could be used too (but corresponding containers may not be created automatically).

- Each package added to Bioconda also has a corresponding Docker BioContainer automatically created and uploaded to Quay.io. A list of these and other containers can be found at the Biocontainers Registry. For instance, the CESM bioconda container can be found here, with both docker and singularity containers available.

- Tools/models developed outside the Galaxy framework are stored in various places. We do not have a full overview yet.

Status: January 2020

Preliminary list of tasks to enable T5.2.2:

- Discussion on possible solutions for submitting jobs from Galaxy to different platforms (in Sweden and Norway). Pulsar seems to be the best solution for Galaxy. This is already what is used by Galaxy Europe where Galaxy Climate is currently deployed.

- Usage of conda package manager is recommended along with containers (as done with bioconda and biocontainers).

- There is no equivalent container community repository for climate: should we set up something similar to biocontainers?

Status: April 2020

Target backend systems have been identified:

Norway:

Sweden:

Discussion with SNIC has been initiated for using HPC resources in Sweden.

EOSC Nordic Task 5.3.1

T5.3.1: Integrated Data Management Workflows (M1-36) Lead: CSC – Participants: UIO-INF/SIGMA2, UH, SNIC, UICE, UIO-GEO/SIGMA2, FMI, SIGMA2

This task will provide solutions for facilitating complex data workflows involving discipline-specific repositories, data sharing portals (such as Earth System Grid Federation, ESGF) and storage for active computing. An emerging HTTP API solution integrated with B2SAFE workflows will be adopted to streamline the creation of replicas of community-specific data repositories towards the computing sites, where computations can be performed. This task will comprise also the adaptation of portals

What is known/available at the start of the project

- B2share Nordic

- B2safe

- CernVM File System (CernVM-FS)

- ownCloud

- Galaxy data libraries. Per instance (same as for Galaxy workflows) but possible to “replicate” between Galaxy instances through CVMFS.

- Earth System Grid Federation (ESGF)

Status: January 2020

CVMFS is used to replicate Galaxy reference data on any Galaxy instance. See the Galaxy Reference Data with CVMFS tutorial for more information on the usage of CVMFS in Galaxy for deploying/replicating reference data. This approach is probably suitable for small climate datasets (for instance teaching datasets, in-situ observations) but is not appropriate for the bulk of climate data. We suggest investigating other remote access solutions.

Preliminary list of tasks to enable T5.3.1:

- Prepare test dataset in zarr for parallel access through python.

- Create data catalog using intake. The goal will be to automatically create/update data catalog as new data is harvested (link to T5.3.2).

- Check intake-esm, which is specific to CMIP and the CESM Large Ensemble Community Project.

- Install ownCloud on NIRD research data project area to test access and processing of climate data from Galaxy (using intake catalog and zarr data format).
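
The catalog task above (create or update the catalog as new data is harvested) can be sketched as follows. This is an illustration under stated assumptions, not the project's implementation: the catalog layout (`metadata`, `sources`, `driver`, `args`, `urlpath`) follows the usual intake YAML catalog structure, the driver names `netcdf` and `zarr` are assumed to be those provided by the intake-xarray plugin, and the helper names `build_catalog` and `to_yaml` as well as the file paths are hypothetical. Values are written as double-quoted strings so that the output stays valid YAML without a YAML library.

```python
import json
from pathlib import PurePosixPath

# Assumed mapping from file suffix to intake driver name.
DRIVERS = {".nc": "netcdf", ".zarr": "zarr"}


def build_catalog(urlpaths):
    """Build an intake-style catalog dict for the given dataset locations."""
    sources = {}
    for urlpath in sorted(urlpaths):
        path = PurePosixPath(urlpath)
        driver = DRIVERS.get(path.suffix)
        if driver is None:
            continue  # skip files we do not know how to open
        sources[path.stem] = {"driver": driver, "args": {"urlpath": urlpath}}
    return {"metadata": {"version": 1}, "sources": sources}


def to_yaml(node, indent=0):
    """Render nested dicts of scalars as block-style YAML text."""
    pad = " " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict) and value:
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 2))
        elif isinstance(value, dict):
            lines.append(f"{pad}{key}: {{}}")  # empty mapping
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return "\n".join(lines)


# Hypothetical harvested files; rerun after each harvest to refresh the catalog.
harvested = ["/data/tas_monthly.nc", "/data/readme.txt", "/data/pr_daily.zarr"]
print(to_yaml(build_catalog(harvested)))
```

Regenerating the whole catalog from the list of harvested files keeps the step idempotent, which is convenient if harvesting (T5.3.2) runs on a schedule.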

Status: April 2020

- Preliminary tests using ownCloud have been successful. So far we have only tested functionality; no performance analysis has been performed yet. Larger datasets will be harvested for further testing.

Status: June 2020

- A larger dataset has been uploaded to ownCloud (by Adil) and we have started new tests. This new dataset will be used for developing new training material (on Galaxy) for learning to use and analyze the Community Earth System Model.

- intake catalog for accessing the dataset: it is a yaml file where we specify how and where to access the dataset. Ideally, catalogs will be archived (NIRD archive, zenodo or other) with metadata from the dataset (to be searchable and findable).

- Simple python jupyter notebook to illustrate the usage of the dataset.

- Example to compute monthly average.

- Example to create a zarr array from a netCDF file.

- Training material (under development). Information on how to render the page locally can be found here.
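
The notebook examples listed above are not reproduced here. As a dependency-free illustration of what the monthly average example computes, the sketch below groups dated values by calendar month and averages each group. The function name `monthly_means` and the sample values are hypothetical; on real gridded climate data the same grouping would normally be done with an array library rather than plain Python.

```python
from collections import defaultdict
from datetime import date


def monthly_means(samples):
    """Average (date, value) samples per calendar month."""
    buckets = defaultdict(list)
    for day, value in samples:
        buckets[(day.year, day.month)].append(value)
    # Sort by (year, month) so the result reads chronologically.
    return {
        month: sum(values) / len(values)
        for month, values in sorted(buckets.items())
    }


# Made-up daily values for illustration only.
samples = [
    (date(2020, 1, 1), 2.0),
    (date(2020, 1, 2), 4.0),
    (date(2020, 2, 1), 10.0),
]
print(monthly_means(samples))
```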

Climate data relevant for EOSC-Nordic

The list of data relevant for the Climate community can be found here.

EOSC Nordic Task 5.3.2

T5.3.2: Machine actionable DMPs (M1-36) Lead: SIGMA2 – Participants: GFF, SNIC, UGOT/SND

Link DMP with storage & computing resource allocations.

What is known/available at the start of the project

- Storage and computing resource allocation are usually based on scientific merit. Project managers submit storage and/or compute applications that are usually evaluated and granted by a resource allocation committee.

- Data Management Plans are usually requested but not necessarily mandatory. In addition, DMPs are rarely assessed or monitored during the lifetime of a project.

Status: January 2020

Preliminary list of tasks to enable T5.3.2:

- This task has not started yet.

Status: April 2020

- Brainstorming activity to narrow down what we can do during EOSC-Nordic: the goal will be to “link” DMPs (for instance Easy DMP) with resource allocation.
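
One possible reading of "linking" a DMP with resource allocation is to derive the storage request from what the DMP declares. The sketch below is only an illustration of that idea. It assumes a machine-actionable DMP serialized as JSON, with field names (`dmp`, `dataset`, `distribution`, `byte_size`) loosely modeled on the RDA DMP Common Standard; these names, the example plan, and the helper functions are assumptions and do not describe the Easy DMP export format.

```python
import json


def requested_storage_bytes(dmp_text):
    """Sum the declared size of every distribution in a DMP document."""
    dmp = json.loads(dmp_text)["dmp"]
    total = 0
    for dataset in dmp.get("dataset", []):
        for distribution in dataset.get("distribution", []):
            total += distribution.get("byte_size", 0)
    return total


def allocation_covers_dmp(dmp_text, allocated_bytes):
    """Check whether a granted storage allocation covers the DMP's needs."""
    return requested_storage_bytes(dmp_text) <= allocated_bytes


# Hypothetical plan with two datasets (4 TB and 0.5 TB).
EXAMPLE_DMP = json.dumps({
    "dmp": {
        "title": "Hypothetical climate project DMP",
        "dataset": [
            {"title": "Model output", "distribution": [{"byte_size": 4 * 10**12}]},
            {"title": "Observations", "distribution": [{"byte_size": 5 * 10**11}]},
        ],
    }
})

print(requested_storage_bytes(EXAMPLE_DMP))
```

A check like `allocation_covers_dmp` could run when a storage application is submitted and again during the project, which would also address the lack of DMP monitoring noted earlier.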

Status: May 2020

- A first draft for EOSC-Nordic Climate Science roadmap has been released: